Running Batch Jobs

This section describes the batch processing environment in our facilities.

What is Batch Processing?

Batch processing is a procedure by which you submit a program for delayed execution. Batch processing enables you to perform multiple commands and functions without waiting for results from one command to begin another, and to execute these processes without your attendance. The terms process and job are interchangeable.

The batch processing system at HMDC runs on a high throughput cluster on which you can perform extensive, time-consuming calculations without the technical limitations imposed by a typical workstation.

Why Use Batch Processing?

HMDC provides a large, powerful pool of computers that are available for you to use to conduct research. This pool is extremely useful for the following applications:

-

Jobs that run for a long time - You can submit a batch processing job that executes for days or weeks and does not tie up your RCE session during that time. In fact, you do not need to run an RCE desktop session to submit a batch job; you can submit batch jobs from the command line via ssh.

-

Jobs that are too big to run on your desktop - You can submit batch processing that requires more infrastructure than your workstation provides. For example, you could use a dataset that is larger in size than the memory on your workstation.

-

Groups of dozens or hundreds of jobs that are similar - You can submit batch processing that entails multiple uses of the same program with different parameters or input data. Examples of these types of submission are simulations, sensitivity analysis, or parameterization studies.

To learn more about our batch cluster resource manager, continue reading below. To learn how to submit a batch job right away, skip ahead to Batch Basics.

Condor System for Batch Processing

The Condor system enables you to submit a program for execution as batch processing, which then does not require your attention until processing is complete. The Condor project website is located at the following URL:

http://www.cs.wisc.edu/condor/

To view the user manual for this software, go to the following URL and choose a viewing option:

http://www.cs.wisc.edu/condor/manual/

Condor System Components and Terminology

A Condor system comprises a central manager and a pool. A Condor central manager machine manages the execution of all jobs that you submit as batch processing. The pool of Condor machines associated with that central manager executes individual processes based on policies defined for each pool member. If a computing installation has multiple Condor pools or additional machine clusters dedicated to Condor system use, these pools and clusters can be associated as a flock.

Listed below are some common Condor terms and references, which are unique to Condor:

-

Cluster - A group of jobs or processes submitted together to Condor for batch processing is known as a cluster. Each job has a unique job identifier in a cluster, but shares a common cluster identifier.

-

Pool - A Condor pool comprises a single machine serving as a central manager, and an arbitrary number of machines that have joined the pool. Simply put, the pool is a collection of resources (machines) and resource requests (jobs).

-

Jobs - In a Condor system, jobs are unique processes submitted to a pool for execution and are tracked with a unique process ID number.

-

Flock - A Condor flock is a collection of Condor pools and clusters associated for managing jobs and clusters with varying priorities. A Condor flock functions in the same manner as a pool, but provides greater processing power.

When you submit batch processing to the Condor system, you use a submit description file (or submit file) to describe your jobs. This file results in a ClassAd for each job, which defines requirements and preferences for running that job. Each pool machine has a corresponding description, called the machine ClassAd, of the job requirements and preferences that the machine can satisfy. The central manager matches job ClassAds with pool machine ClassAds to select the machine on which to execute a job.
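The matching idea can be illustrated with a deliberately simplified Python sketch. It is not how Condor evaluates ClassAds (real ClassAds use their own expression language); the dictionaries, the `requirements` callable, and the machine names are hypothetical stand-ins chosen only for illustration.

```python
# Toy model of ClassAd matchmaking. Attribute names and values are
# illustrative assumptions, not real Condor data structures.

def first_match(job_ad, machine_ads):
    """Return the name of the first machine whose ad satisfies the job."""
    for machine in machine_ads:
        if job_ad["requirements"](machine):
            return machine["Name"]
    return None  # no machine qualifies; the job would stay in the queue

machines = [
    {"Name": "small-node", "Memory": 16},
    {"Name": "big-node", "Memory": 1975},
]
job = {"requirements": lambda m: m["Memory"] >= 32}

print(first_match(job, machines))  # big-node
```

A real central manager also weighs preferences and user priorities, which this sketch leaves out.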

Process Identification Numbers

For Condor batch processing, there are two identification numbers that are important to you:

-

Cluster number - The cluster number represents each set of executable jobs submitted to the Condor system. It is a cluster of jobs, or processes. A cluster can consist of a single job.

-

Process number - The process number represents each individual job (process) within a single cluster. Process numbers for a cluster always start at zero.

Each single job in a cluster is assigned a process identification number, called the process ID or job ID. This ID consists of both cluster and process number in the form <cluster>.<process>.

For example, if you submit a batch that consists of a single job, and your batch submission to the Condor queue is assigned cluster number 20, then your process ID is 20.0. If you submit a batch that consists of fifteen jobs that all use the same executable, and your batch submission to the Condor queue is assigned cluster number 8, then your process IDs range from 8.0 to 8.14.
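The numbering rule can be checked with a short Python sketch. The helper name `job_ids` is hypothetical; it only reproduces the <cluster>.<process> pattern described above and does not talk to Condor.

```python
def job_ids(cluster, n_jobs):
    """Return the <cluster>.<process> IDs for a cluster of n_jobs jobs."""
    # Process numbers always start at zero within a cluster.
    return [f"{cluster}.{process}" for process in range(n_jobs)]

print(job_ids(20, 1))      # ['20.0']
ids = job_ids(8, 15)
print(ids[0], ids[-1])     # 8.0 8.14
```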

Batch Basics

Batch Processing Terminology

These terms are specific to HTCondor, our batch processing scheduler:

- Node - A processor (or set of processors) capable of running a job

- Pool - A collection of nodes

- Job - A single process with an executable, arguments, input, output and error files

- Cluster - A collection of jobs that share common executables and/or input files

- Queue - The list of jobs that have been submitted to run on the pool

- Scheduler - A process responsible for determining which jobs in the queue are run next

Batch Processing Utilities

- condor_status - shows the status of all of the nodes in the pool

- condor_q - shows the status of all jobs in the queue

- condor_submit - submit a cluster of jobs to the queue

- condor_submit_util - RCE helper application that automates the submission process (use it in place of condor_submit in most cases)

- condor_userprio - shows usage statistics and priorities for users who are actively using pool resources

Batch workflow

The workflow to submit batch processing to the Condor system is as follows:

-

Create a directory in which to submit jobs to the Condor system.

Make sure that the directory and files with which you plan to work are readable and writable by other users, which include Condor processes.

For example, type the following:

mkdir condor

cd condor

You can request that a project directory be set up for you to use for batch processing. If you perform your batch processing within your home directory, the space used for your data and program files can consume much of your allotted resources, which can cause problems with logging in to the system, so we recommend working in a project space. For more information on project spaces, go to our Projects and Shared Space page.

-

Choose an execution environment, called a universe, for your jobs.

At HMDC, you always use the vanilla universe. This execution environment supports processing of individual serial jobs, but has few other restrictions on the types of jobs that you can execute.

-

Make your jobs batch ready.

Batch processing runs in the background, meaning that you cannot input to your executable interactively. You must create a program or script that reads in your inputs from a file, and writes out your outputs to another file.

You also must identify the full path and executable source to use for your Condor cluster. The default executable for the condor_submit_util script is the R language. In the RCE, the path and executable source for this language is /usr/bin/R. Any command-line application or program can be submitted as a batch job (Matlab, Stata, Python, etc.).

-

If you choose to use the condor_submit_util script to create the submit description file (or submit file) and submit your jobs to the Condor system for batch processing automatically, skip to the next step.

If you choose to submit your batch processing to the Condor system manually, create a submit file.

A submit file is a plain-text file that describes a batch of jobs for the Condor software. This file contains the following descriptors:

-

Environment (vanilla)

-

Executable program path and file name

-

Program arguments (properly quoted -- see manual)

-

Input and output file names

-

Log and error file names

Here is an example of a basic submit file:

Universe = vanilla

Executable = /usr/bin/R

Arguments = --no-save --no-restore

should_transfer_files = NO

Requirements = Memory >= 32

output = $HOME/mybatchjob/output.txt

error = $HOME/mybatchjob/error.txt

Log = $HOME/mybatchjob/log.txt

Queue 1

-

Execute the condor_submit_util command to write the submit file and submit your program automatically to the Condor job queue.

If you chose to write your own submit file, execute the condor_submit <submit file>.submit command to submit your jobs to the queue.

Condor then checks the submit file for errors, creates a ClassAd object and the object attributes for that cluster, and then places this object in the queue for processing.
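As a rough illustration of the manual path in the workflow above, the following Python sketch assembles submit file text from the descriptors listed earlier. The function name `build_submit_file` and its parameters are hypothetical, and the sketch only produces text; you would still save it to a file and submit it with condor_submit.

```python
def build_submit_file(executable, arguments, output, error, log, count=1):
    """Return submit-file text for `count` vanilla-universe jobs."""
    lines = [
        "Universe = vanilla",
        f"Executable = {executable}",
        f"Arguments = {arguments}",
        f"output = {output}",
        f"error = {error}",
        f"Log = {log}",
        f"Queue {count}",  # Queue is written without an equals sign
    ]
    return "\n".join(lines) + "\n"

text = build_submit_file(
    "/usr/bin/R", "--no-save --vanilla", "out.txt", "error.txt", "log.txt"
)
print(text)
```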

Determining batch parameters

Before you submit your program for batch processing, you need to determine the parameters for this submission. To use the Condor system for batch processing, you must define these parameters by assigning values to submit file arguments, which describe the jobs that you choose to submit for processing.

In the RCE, you always use the vanilla environment.

To determine the remaining submit file arguments, answer the following questions:

-

What is the executable path and file name?

For any shell script or statistical application installed in the RCE, the condor_submit_util script can determine the full path for the executable. At the script prompt, you type in the name of your script, program, or application. The default executable in the RCE is the R language, and the path and executable name are /usr/bin/R. Any command-line application or program can be used for batch processing, including Matlab, Stata, Python, and Perl.

-

Do you have any arguments to supply to the executable?

Arguments are parameters that you specify for your executable. For example, the default arguments in the condor_submit_util script are --no-save and --vanilla, which specify how to launch and exit the R program. The argument --no-save specifies not to save the R workspace at exit. The argument --vanilla instructs R to not read any user or site profiles or restored data at start up and to not save data files at exit.

-

What are the input file names?

If you are using the R program, your input file(s) will be whatever R script you want to execute.

-

What do you plan to name the output files?

A general rule for batch processing is that you have one output file for each input file. Therefore, if you have seven input files, you expect to have seven output files after processing is complete. A useful practice is to correlate the names of input and output files.

-

How many times do you need to execute this script or program?

A general rule for batch processing is that you execute your job one time for each input file that you use.
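The one-output-per-input rule and the advice to correlate file names can be sketched in Python. This is only an illustration under stated assumptions: the input files are taken to be small data files named in.N (for R jobs, the input is instead the R script itself), and the helper names `output_name` and `run_job` are hypothetical.

```python
import tempfile
from pathlib import Path

def output_name(input_name, in_base="in", out_base="out"):
    """Map an input file name such as 'in.3' to 'out.3'."""
    suffix = input_name[len(in_base):]
    return out_base + suffix

def run_job(input_path, output_path):
    """Read numbers from a file and write their sum to another file.

    Nothing is read from the keyboard, so the job can run unattended.
    """
    values = [float(token) for token in Path(input_path).read_text().split()]
    Path(output_path).write_text(f"{sum(values)}\n")

workdir = Path(tempfile.mkdtemp())
(workdir / "in.0").write_text("1\n2\n3\n")
run_job(workdir / "in.0", workdir / output_name("in.0"))
print((workdir / "out.0").read_text())  # 6.0
```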

Download Batch Example

To set up our batch processing example for use, you first download the source material, and then determine your batch processing parameters.

Downloading the Source Files

To download the source files for use in this example:

-

Log in to your RCE session.

-

Open this page in a web browser in your RCE session.

-

Click the file condor_example.tar.gz (see below) to download it.

-

Click the Save to Disk option, and then click OK to save the tar file to your desktop.

-

Open a terminal window, and unzip the tar file in the Desktop directory. Type:

tar zxvf Desktop/condor_example.tar.gz

The contents of the uncompressed example file will look like this:

condor_example/

condor_example/condor_submit_util/

condor_example/bootstrap.R

You now have a directory named condor_example in your home directory, which contains the files necessary to run our example.

| condor_example.tar.gz | 667 bytes |

Using condor_submit_util (recommended for new users)

After you set up your working directory and define your batch processing parameters, you can submit your script and input files for processing. You can use condor_submit_util to build your submit file and submit your batch, or you can write your own submit file and submit it to the cluster with the condor_submit command. If you are new to batch processing with HTCondor, we recommend using condor_submit_util.

To build a submit file automatically and submit your program for batch processing, you can use the Automated Condor Submission script (aka condor_submit_util) in two modes: interactive or command line.

Note: If you do not specify any options when you use condor_submit_util, it enters interactive mode automatically. Also, if you do not specify required options when you use condor_submit_util in command-line mode, the script enters interactive mode automatically, or it reports an error and returns you to the command-line prompt.

For examples of using condor_submit_util, see Other Batch Examples.

Working interactively with condor_submit_util

When you use the script in interactive mode, you can press the Return key to accept default values. Default values are specified in the prompts inside square brackets, and appear at the end of the prompt.

To use the condor_submit_util script in interactive mode:

-

Execute the condor_submit_util command.

Type the following at the command prompt in your Condor working directory:

> condor_submit_util

*** No arguments specified, defaulting to interactive mode...

*** Entering interactive mode.

*** Press return to use default value.

*** Some options allow the use of '--' to unset the value.

-

The script first prompts you to define the executable program that you choose to submit for batch processing, and then requests the list of arguments to provide to that executable:

Enter executable to submit [/usr/bin/R]: <executable name>

Enter arguments to /usr/bin/R [--no-save --vanilla]: <arguments>

The default argument --no-save specifies not to save the R workspace at exit. The default argument --vanilla instructs R to not read any user or site profiles or restored data at start up and to not save data files at exit.

If you do not have any arguments to apply to your executable, then type -- to supply no arguments.

-

Next, the script prompts you to provide a name or pattern for the input, output, log, and error files for this Condor cluster submission. You can include a relative path in these entries, if you choose:

Enter input file base [in]: <input path and file name or pattern>

Enter output file base [out]: <output path and file name or pattern>

Enter log file base [log]: <log path and file name or pattern>

Enter error file base [error]: <error path and file name or pattern>

Note: If you are using the batch example, the input file is bootstrap.R.

-

After specifying the files, the script prompts you to define the number of iterations that you choose to execute your program for processing:

Enter number of iterations [10]: <integer>

-

The system creates the submit file for this batch process using your responses to script prompts.

An example submit file is shown here. To view the contents of your submit file, include the option -v (verbose) when you launch the condor_submit_util script:

*** creating submit file '<login account name>-<date-time>.submit'

Universe = vanilla

Executable = /usr/bin/R

Arguments = --no-save --vanilla

when_to_transfer_output = ON_EXIT_OR_EVICT

transfer_output_files = <output file>

input = <input file>

output = <output file>

error = <error file>

Log = <log file>

Queue <integer>

-

If you use the verbose option, the script prompts you to confirm that the submit file is correct. To continue, press Return or type y.

Condor checks the submit file for errors, creates the ClassAd object for your submission, and adds that object to the end of the queue for processing. The script lists messages that report this progress in your terminal window, and includes the cluster number assigned to the batch process. For example:

Is this correct? (Enter y or n) [yes]: y

] submitting job to condor...

] removing submit file '<login account name>-<date-time>'

*** Job successfully submitted to cluster <cluster ID>.

-

Finally, the script prompts whether you choose to receive email when execution of your batch processing is complete. Press Return or type y to receive email, or type n to not send email and exit the script.

If you choose to receive email, before exiting, the script prompts you to enter the email address to which you choose to send the notification. The default email address for notification is your email account on the server on which you launched the script. For example:

Would you like to be notified when your jobs complete? (Enter y or n)

[yes]: y

Please enter your email address [<your email account on this server>]:

*** creating watch file '/nfs/fs1/projects/condor_watch/<Condor machine>.<batch cluster>.<your email>'

-

View your job queue to ensure that your batch processing begins execution successfully.

See Managing your batch job for complete details about checking the queue. An example is:

> condor_q

-- Submitter: vnc.hmdc.harvard.edu : <10.0.0.47:60603> : vnc.hmdc.harvard.edu

ID    OWNER  SUBMITTED   RUN_TIME    ST PRI SIZE CMD

9.0   arose  10/4 11:02  0+00:00:00  R  0   9.8  dwarves.pl

9.1   arose  10/4 11:02  0+00:00:00  R  0   9.8  dwarves.pl

9.2   arose  10/4 11:02  0+00:00:00  I  0   9.8  dwarves.pl

9.3   arose  10/4 11:02  0+00:00:00  R  0   9.8  dwarves.pl

4 jobs; 1 idle, 3 running, 0 held
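If you want to check the queue from a script, the summary line at the end of the listing is the easiest part to read. The Python sketch below parses a line in the exact form shown above; the function name `parse_queue_summary` is hypothetical, and other HTCondor versions may print the summary differently, so treat the pattern as an assumption.

```python
import re

def parse_queue_summary(line):
    """Parse a condor_q summary line into a dict of job counts."""
    match = re.search(
        r"(\d+) jobs; (\d+) idle, (\d+) running, (\d+) held", line
    )
    if match is None:
        raise ValueError(f"unrecognized summary line: {line!r}")
    total, idle, running, held = map(int, match.groups())
    return {"total": total, "idle": idle, "running": running, "held": held}

summary = parse_queue_summary("4 jobs; 1 idle, 3 running, 0 held")
print(summary)
```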

Working with command arguments to condor_submit_util

When you use the script in command-line mode, you must specify all required options or the script does not execute. For example, the default number of iterations for the script is 10. If you do not have 10 input files in your working directory and you do not enter the option to specify the correct number of iterations that you plan to perform, the script does not execute and returns a message similar to the following:

> condor_submit_util -v

*** Fatal error; exiting script

*** Reason: could not find input file 'in.7'.

To use the condor_submit_util script in command-line mode:

-

Execute the condor_submit_util command with the appropriate arguments. See Script options for detailed information about script options.

At a minimum, you must include the following options on the command line:

-

Executable program file name

-

Executable file arguments, or --noargs option

-

Input file, or --noinput option

-

Number of iterations, if you do not have 10 input files

At a minimum, type the following at the command prompt from within your Condor working directory:

> condor_submit_util -x <program> -a <arguments> -i <input files>

-

Condor creates a submit file and checks it for errors, creates the ClassAd object, and adds that object to the end of the queue for processing. The script supplies messages that report this progress, and includes the cluster number assigned to your Condor cluster. For example:

> condor_submit_util -x <program> --noargs

Submitting job(s)..........

Logging submit event(s)..........

10 job(s) submitted to cluster 24.

If the script encounters a problem when creating the submit file, it enters interactive mode automatically and prompts you for the correct inputs.

-

View your job queue to ensure that your batch processing begins execution.

See Managing your batch job for complete details about checking the queue.

Passing Arguments to the Program

You can pass arguments to the batch program using the --args flag in your submit file. For example, if you change the arguments line in your submit file to something like the following:

Arguments = --no-save --vanilla --args <arguments>

Then the contents of <arguments> will be passed in to the program as command-line arguments. The syntax for passing and handling these arguments differs depending on the statistics program in use.

Passing Arguments to R

To parse command-line arguments in R, use the following command in your R script:

args <- commandArgs(TRUE)

This puts the command-line arguments (the contents of <arguments>) into the variable args.

Script options

The condor_submit_util script makes the task of running jobs on the batch servers easier and more intuitive. It handles job submission for you: it constructs the appropriate submit file for your job, and calls the condor_submit command. To use this utility you need a program to run. The format for using this script is:

condor_submit_util [OPTIONS]

In addition, the script can notify you when your job is done via email so you do not have to check the queue constantly using condor_q. In future releases, the script also will be able to keep usage data so administrators can track overall performance.

The script can be run in two ways, interactively or from the command line. When running interactively, the script prompts you for the values required to run the batch job. If you supply arguments on the command line, these arguments are used in addition to default values for any values you do not supply.

Options

-h, --help

Print help page and exit.

-V, --version

Print version information and exit.

-v, --verbose

Show information about what goes on during script execution.

-I, --Interactive

Enter interactive mode, in which the script prompts you for the required values.

-s, --submitfile FILE

Specify the name of the created submit file (default is <user-name-datetime>.submit).

-k, --keep

Do not delete the created submit file.

-N, --Notify

Receive notification by email when jobs are complete.

-x, --executable FILE

The executable for condor to run (default is /usr/bin/R).

-a, --arguments ARGS

Any arguments you want to pass to the executable (should be quoted, default is "--no-save --vanilla").

-i, --input [FILE|PATT]

Either an explicit file name or base name of input files to the executable (default is in).

-o, --output [PATT]

Base name of output files for the executable (default is out).

-e, --error [PATT]

Base name of error files for the executable (default is error).

-l, --log [PATT]

Base name of log files for the executable (default is log).

-n, --iterations NUM

Number of iterations to submit (default is 10).

-f, --force

Overwrite any existing files.

--noinput

Use no input file for executable.

--noargs

Send no arguments to executable.

Examples

-

You have a compiled executable (named foo) that takes a data set and does some analysis. You have five different data sets to run against (named data.0, data.1 ... data.4). You want to save the submit file and be notified when the job is done.

condor_submit_util -x foo -i "data" -k -N

-

You have an R program that has some random output. You want to run it 10 times to see the results.

condor_submit_util -i random.R -n 10

-

You have an R program that will take a long time to complete. You only need to run it once, but you want to be notified when it is done.

condor_submit_util -i long.R -n 1 -N

Notes: For -o, -e, and -l, these options are considered base names for the implied files. The actual file names are created with a numerical extension tied to its condor process number (0 indexed). This means that if you execute condor_submit_util -o "out" -n 3, three output files named out.0, out.1, and out.2 are created.

Also, for -i, the script first checks whether the name supplied is an actual file on disk. If it is not, the script uses the argument as a base name, similar to -o, -e, and -l.
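The naming rules in these notes can be mimicked in a few lines of Python. This is only a sketch of the documented behavior, not the script's actual code; the helper names are hypothetical, and reusing an existing input file for every iteration is an assumption based on the examples above.

```python
import os

def implied_files(base, iterations):
    """Return base.0 .. base.(iterations-1), as described for -o, -e, and -l."""
    return [f"{base}.{process}" for process in range(iterations)]

def input_files(name, iterations):
    """Assumed reading of the -i rule: an existing file is reused for every
    iteration; otherwise the name is treated as a base name."""
    if os.path.isfile(name):
        return [name] * iterations
    return implied_files(name, iterations)

print(implied_files("out", 3))  # ['out.0', 'out.1', 'out.2']
```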

Option conventions

For most condor_submit_util options, there are two conventions that you can use to specify that option on the command line:

-

The -<letter> convention - Use this simple convention as a short cut.

For example, the simple option to receive email notification when your batch processing is complete is -N.

-

The --<term> convention - Use this lengthy convention to make it easy to determine what option you use.

For example, the lengthy option to receive email notification when your batch processing is complete is --Notify.

Both conventions for specifying an option perform the same function. For example, to receive email notification when your batch processing is complete, the options -N and --Notify perform the same function.

Pattern Arguments

For file-related options, such as the output file name or the error file name, you can use a pattern-matching argument. For example, if you specify the option -i "run", Condor looks for an input file with the name run. If there is no file named run, Condor looks for a file name that begins with run., such as run.14.

If there are multiple files with names that begin with the pattern that you specify, then for the first execution within a cluster, Condor uses the file with the name that matches first in alphanumeric order. For successive executions within a cluster, Condor uses the files with names that match successively in alphanumeric order.
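The source does not say exactly how "alphanumeric order" is computed. If it is plain string comparison (an assumption), then numbered files do not sort numerically, which the following Python sketch makes visible. The helper name `matching_inputs` is hypothetical.

```python
def matching_inputs(pattern, filenames):
    """Return names that begin with 'pattern.', sorted as plain strings."""
    prefix = pattern + "."
    return sorted(name for name in filenames if name.startswith(prefix))

files = ["run.2", "run.10", "run.1", "other.1"]
print(matching_inputs("run", files))  # ['run.1', 'run.10', 'run.2']
```

Under that assumption, run.10 is used before run.2. Zero-padded names such as run.01, run.02, and run.10 would keep string order and numeric order the same.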

Saving and Reusing a Submit File

When you use condor_submit_util in command-line mode to submit a program for batch processing, include the option -k (keep) to save the submit file created by the utility.

You can edit and reuse that submit file to submit similar programs to the Condor queue for batch processing. You also can include Condor macros to further improve the usability of the file. See the HTCondor documentation for detailed information about how to use Condor macros.

For example, if you plan to submit several iterations of a program for batch processing, you can use a single submit file for all iterations. In that submit file, you use the $(PROCESS) macro to specify unique input, output, error, and log files for each iteration.

Use of the $(PROCESS) macro requires that you develop a naming convention for files or subdirectories that includes the full range of process IDs for your iterations.
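To check a naming convention before you submit, it can help to expand the macro by hand. The Python sketch below substitutes process numbers into a template; the function name and the results/run_N layout are hypothetical, and the real substitution is done by Condor at submit time.

```python
import re

def expand_process_macro(template, process):
    """Replace $(PROCESS), in any letter case, with a process number."""
    return re.sub(
        r"\$\(process\)", str(process), template, flags=re.IGNORECASE
    )

# List the output paths that three iterations would need.
for process in range(3):
    print(expand_process_macro("results/run_$(PROCESS)/out.txt", process))
```

Every path printed this way must exist (or be creatable) for the full range of process numbers, from 0 to the number of iterations minus one.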

To use an existing submit file when you submit a batch process, you cannot use the script and must execute the condor_submit command instead. Type the following:

condor_submit my.submit

Manual batch submit (only recommended for experienced users)

You use the command condor_submit to submit batch processing manually to the Condor system.

If you are new to batch processing, see the previous section, Using condor_submit_util.

In the RCE, you must include the attribute Universe = vanilla in every submit file. If you do not include this statement, Condor attempts to enable job checkpointing, which consumes central manager resources.

Perform the following to submit batch processing manually:

-

Before you submit your program for batch processing, create a directory in which to run your submission, and then change to that directory. Make sure that you set permissions to enable the Condor software to read from and write to the directory and its contents.

Also make sure that your program is batch ready.

-

Create a submit file for your program.

For information about how to create a submit file, see Submit file basics.

Note: You can use the HMDC Automated Condor Submission script and include the -k option to create a submit file, and then edit and reuse that submit file for other submissions.

-

Submit your program for batch processing.

Type the following at the command prompt:

condor_submit <submit file>

Condor then checks the submit file for errors, creates the ClassAd object, and places that object in the queue for processing. New jobs are added to the end of the queue. For example:

condor_submit myjob.submit

Submitting job(s)..........

Logging submit event(s)..........

10 job(s) submitted to cluster 24.

-

View your job queue to ensure that execution begins.

condor_q <username>

For example:

condor_q wharrell

Submit file basics

You send input to the Condor system using a submit file, which is a text file of <attribute> = <value> pairs. The naming convention for a submit file is <file name>.submit. Before you submit any batch processing, you first set up a directory in which to work, and create the executable script or program that you choose to submit for processing.

Basic attributes used in the submit file include the following:

-

Universe - At HMDC you specify the vanilla universe, which supports serial job processing. HMDC does not support use of other Condor environments.

-

Executable - Type the name of your program. In the job ClassAd, this becomes the Cmd value. The default value in the RCE for this attribute is the R program.

-

Arguments - Include any arguments required as parameters for your program. When your program is executed, the Condor software issues the string assigned to this attribute as a command-line argument. In the RCE, the default arguments for the R program are --no-save and --vanilla.

-

Input - Type the name of the file or the base name of multiple files that contain inputs for your executable program.

-

Output - Type the name of the file or the base name of multiple files in which Condor can place the output from your batch job.

-

Log - Type the name of the file or the base name of multiple files in which Condor can record information about your job's execution.

-

Error - Type the name of the file or the base name of multiple files in which Condor can record errors from your job.

-

Queue - The command queue instructs the Condor system to submit one set of program, attributes, and input file for processing. You use this command one time for each input file that you choose to submit. (Note: this keyword should be specified without an equals sign, e.g. "Queue 10".)

-

Request_Cpus - The number of physical CPU cores required to run each instance of the job, as an integer.

-

Request_Memory - The amount of physical memory (RAM) required to run each instance of the job, in MB. Your job may also have access to additional system swap memory if available, but this value guarantees a minimum amount of available system main memory for your job.

The full documentation of all available submit file options can be found at http://research.cs.wisc.edu/htcondor/manual/current/condor_submit.html

An example submit file with the minimum required arguments is as follows:

cat myjob.submit

Universe = vanilla

Executable = /usr/bin/R

Arguments = --no-save --vanilla

input = <program>.R

output = out.$(Process)

error = error.$(Process)

Log = log.$(Process)

Request_Cpus = 2

Request_Memory = 4096

Queue 10

This file instructs Condor to execute ten R jobs using one input program (<program>.R) and to write unique output files to the current directory. Each job process will run with access to 2 CPU cores and 4GB of RAM.

When you specify file-related attributes (executable, input, output, log, and error), either place those files within the directory from which you execute the Condor submission or include the relative path name of the files.
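Because a submit file is a list of <attribute> = <value> pairs plus a Queue statement, it is easy to estimate what a submission asks of the pool before you send it. The Python sketch below is a simplified reader, not a full parser: it ignores macros and other submit-file syntax, and the function name `parse_submit` is hypothetical.

```python
def parse_submit(text):
    """Split submit-file text into (attributes, total queue count)."""
    attributes, queue_count = {}, 0
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        if "=" in line:
            # Split on the first '=' only, so values may contain '>=' etc.
            key, value = line.split("=", 1)
            attributes[key.strip().lower()] = value.strip()
        elif line.lower().startswith("queue"):
            parts = line.split()
            queue_count += int(parts[1]) if len(parts) > 1 else 1
    return attributes, queue_count

example = """Universe = vanilla
Executable = /usr/bin/R
Request_Cpus = 2
Request_Memory = 4096
Queue 10
"""
attrs, jobs = parse_submit(example)
total_cpus = jobs * int(attrs["request_cpus"])
total_memory_mb = jobs * int(attrs["request_memory"])
print(jobs, total_cpus, total_memory_mb)  # 10 20 40960
```

For the example above, ten jobs at 2 cores and 4096 MB each amount to 20 cores and 40960 MB if all of them run at once.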

Managing your batch job

Once you have submitted your jobs to the queue, you can check on them in several ways, including e-mail notification of job completion and command-line access to both your jobs' status and the current state of the pool.

Managing Job Status

You can monitor progress of your batch processing using the condor_status and condor_q commands. This section describes how to check the status of your processes at any time, and how to remove a process from the Condor queue.

After you submit a job for processing, you can check the status of the Condor machine pool and verify that machines are available on which your jobs can execute.

To check the status of the Condor pool, type the command condor_status. This command returns information about the pool resources. Output lists the number of slots available in the pool and whether they are in use. If there are no idle slots, your batch processing is queued when it is submitted.

For example:

> condor_status

Name OpSys Arch State Activity LoadAv Mem ActvtyTime

vm1@mc-1-1.hm LINUX X86_64 Claimed Busy 1.060 1975 0+17:43:50

vm2@mc-1-1.hm LINUX X86_64 Claimed Busy 1.060 1975 0+17:43:48

vm1@mc-1-2.hm LINUX X86_64 Claimed Busy 1.000 1975 0+17:44:43

vm2@mc-1-2.hm LINUX X86_64 Claimed Busy 1.000 1975 0+17:44:36

vm1@mc-1-3.hm LINUX X86_64 Unclaimed Idle 0.010 1975 0+00:03:57

vm2@mc-1-3.hm LINUX X86_64 Unclaimed Idle 0.000 1975 0+00:00:04

vm1@mc-1-4.hm LINUX X86_64 Unclaimed Idle 0.000 1975 0+00:00:04

Total Owner Claimed Unclaimed Matched Preempting Backfill

X86_64/LINUX 7 0 4 3 0 0 0

Total 7 0 4 3 0 0 0
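If you save this output to a file or capture it in a script, you can count the slots in each state yourself. The following is a rough sketch that assumes the whitespace-separated column layout shown above; the function name count_slot_states is hypothetical, and the sample text is copied from the listing.

```python
from collections import Counter

# Sample rows copied from the condor_status listing above.
SAMPLE = """\
Name OpSys Arch State Activity LoadAv Mem ActvtyTime
vm1@mc-1-1.hm LINUX X86_64 Claimed Busy 1.060 1975 0+17:43:50
vm2@mc-1-1.hm LINUX X86_64 Claimed Busy 1.060 1975 0+17:43:48
vm1@mc-1-2.hm LINUX X86_64 Claimed Busy 1.000 1975 0+17:44:43
vm2@mc-1-2.hm LINUX X86_64 Claimed Busy 1.000 1975 0+17:44:36
vm1@mc-1-3.hm LINUX X86_64 Unclaimed Idle 0.010 1975 0+00:03:57
vm2@mc-1-3.hm LINUX X86_64 Unclaimed Idle 0.000 1975 0+00:00:04
vm1@mc-1-4.hm LINUX X86_64 Unclaimed Idle 0.000 1975 0+00:00:04
"""


def count_slot_states(status_text):
    """Count slots per State column (the fourth field of each slot row)."""
    counts = Counter()
    for line in status_text.splitlines():
        fields = line.split()
        # Slot rows are recognised by the slot@machine form of the first field.
        if len(fields) >= 5 and "@" in fields[0]:
            counts[fields[3]] += 1
    return counts


states = count_slot_states(SAMPLE)
print(states["Claimed"], states["Unclaimed"])  # prints: 4 3
```

A count of zero Unclaimed slots suggests that newly submitted jobs will wait in the queue.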

To check the cumulative use of resources within the Condor pool, include the option -submitter with the command condor_status. This command returns information about each user in the Condor queue. Output lists the user's name, machine in use, and current number of jobs per machine. Use this command to help determine how many resources Condor has available to run your jobs. An example is shown here:

> condor_status -submitter

Name Machine Running IdleJobs HeldJobs

mkellerm@hmdc.harvar w4.hmdc.ha 2 0 0

jgreiner@hmdc.harvar x1.hmdc.ha 9 0 0

jgreiner@hmdc.harvar x3.hmdc.ha 40 0 0

kquinn@hmdc.harvard. x5.hmdc.ha 32 0 0

RunningJobs IdleJobs HeldJobs

jgreiner@hmdc.harvar 49 0 0

kquinn@hmdc.harvard. 32 0 0

mkellerm@hmdc.harvar 2 0 0

Total 83 0 0

Cluster Status Summary

To view a summary of the Condor cluster available resources, run: rce-info.sh

To view a summary of the resources currently in use on the Condor cluster, run: rce-info.sh -t used

Removing your job

To remove a process from the queue, type the command condor_rm <cluster ID>.<process ID>. For example:

> condor_rm 9.9

Job 9.9 marked for removal

To find a list of your jobs type:

> condor_q $USER

To remove all jobs affiliated with a cluster, type the command condor_rm <cluster ID>. For example, the command condor_rm 4 removes all jobs assigned to cluster 4.

To remove all of your clusters' jobs from the Condor queue, type condor_rm -a. For example:

> condor_rm -a

All jobs marked for removal.

Jobs must be deleted from the host they were submitted from.

When you run condor_q you may see multiple "Schedd" sections:

-- Schedd: HMDC.rce@rce6-1.hmdc.harvard.edu

-- Schedd: HMDC.rce@rce6-2.hmdc.harvard.edu

-- Schedd: HMDC.rce@rce6-3.hmdc.harvard.edu

Each of these sections represents a different RCE Login server.

When you submit a job, the server you are logged in to is responsible for "scheduling" that job and keeping track of its status.

Each RCE Login server maintains this status separately, so when you want to remove a job, you must also specify the server where you started it.

The full syntax to remove a job is thus:

condor_rm <cluster ID>[.<process ID>] -name <schedd_string>

e.g.

condor_rm 4806 -name "HMDC.rce@rce6-4.hmdc.harvard.edu"
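The syntax above can be assembled programmatically when you remove jobs from a script. This is a minimal sketch under the assumption that only the arguments shown in this section are needed; the helper name condor_rm_command is hypothetical, and the function only builds the argument list without running anything.

```python
def condor_rm_command(cluster_id, process_id=None, schedd_name=None):
    """Build the condor_rm argument list for a job or a whole cluster."""
    # A bare cluster ID removes every job in the cluster;
    # <cluster ID>.<process ID> removes a single job.
    job = str(cluster_id) if process_id is None else f"{cluster_id}.{process_id}"
    command = ["condor_rm", job]
    if schedd_name is not None:
        # Name the login server (schedd) that the job was submitted from.
        command += ["-name", schedd_name]
    return command


print(condor_rm_command(9, 9))
print(condor_rm_command(4806, schedd_name="HMDC.rce@rce6-4.hmdc.harvard.edu"))
```

The returned list can be passed to subprocess.run on a machine where the Condor commands are installed.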

Other Batch Examples

We created the condor_submit_util script to automate the process of writing a submit file and submitting a cluster of jobs to the Condor queue. When you execute this script, you can include all arguments on the command line. Or, you can execute the script in interactive mode and be prompted for your submit file attributes.

The default settings for the Automated Condor Submission script support creation of submit files for programs that are written in the R language. To submit another type of program to the Condor queue, such as an Octave program, specify the full path and program for the executable (in this example, Octave). You then define your program file as the input to the executable.

Note: To use the condor_submit_util script, you must have an RCE account. See for more information.

The following are example uses of the condor_submit_util script and options to submit batch processing in the RCE. A complete description of options is provided in .

Example Using Multiple Input Files

Start with an executable program (named foo) that uses a set of input data files (named data0 - data4) and does some analysis.

To save the submit file and receive notification when processing is done, type the following command:

> condor_submit_util -x foo -i "data" -k -N

The submit file for this batch looks like this:

Universe = vanilla

Executable = /usr/bin/foo

Arguments = --no-save --vanilla

when_to_transfer_output = ON_EXIT_OR_EVICT

transfer_output_files = out.$(Process)

Notification = Complete

input = data.$(Process)

output = out.$(Process)

error = err.$(Process)

Log = log.$(Process)

Queue 5
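To see which file names this submit file refers to, recall that Condor replaces $(Process) with the process number, counting from 0 up to one less than the Queue count. The sketch below imitates that substitution in plain Python; the function name expand_process_macro is hypothetical and the code does not call Condor.

```python
def expand_process_macro(template, n_jobs):
    """Replace $(Process) with 0 .. n_jobs-1, one name per queued job."""
    return [template.replace("$(Process)", str(i)) for i in range(n_jobs)]


# Templates taken from the submit file above, which ends with "Queue 5".
for template in ("data.$(Process)", "out.$(Process)"):
    print(template, "->", expand_process_macro(template, 5))
```

Under this substitution, the five jobs read data.0 through data.4 and write out.0 through out.4.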

Example Using Multiple Iterations of One Executable Program

An R program (named random.R) produces random output.

To execute this program eight times and place the output of each execution in separate files in your default working directory, type the following command:

> condor_submit_util -i random.R -n 8 -o "outrun"

Following is the submit file for this batch:

Universe = vanilla

Executable = /usr/bin/R

Arguments = --no-save --vanilla

when_to_transfer_output = ON_EXIT_OR_EVICT

transfer_output_files = outrun.$(Process)

input = random.R

output = outrun.$(Process)

error = error.$(Process)

Log = log.$(Process)

Queue 8

Example Checking Process Status

To check the status of the Condor queue after submitting your program for processing, type:

> condor_q

-- Submitter: x1.hmdc.harvard.edu : <10.0.0.47:60603> : x1.hmdc.harvard.edu

ID OWNER SUBMITTED RUN_TIME ST PRI SIZE CMD

24.4 mcox 8/18 16:35 0+00:00:01 R 0 0.0 R --no-save --vani

24.5 mcox 8/18 16:35 0+00:00:00 R 0 0.0 R --no-save --vani

24.6 mcox 8/18 16:35 0+00:00:00 R 0 0.0 R --no-save --vani

24.7 mcox 8/18 16:35 0+00:00:00 I 0 0.0 R --no-save --vani

24.8 mcox 8/18 16:35 0+00:00:00 I 0 0.0 R --no-save --vani

24.9 mcox 8/18 16:35 0+00:00:00 I 0 0.0 R --no-save --vani

6 jobs; 3 idle, 3 running, 0 held

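The column ID lists the cluster and process IDs for your jobs; for example, 24.4 is process 4 of cluster 24. The column ST lists the status of each job in the Condor queue. A value of R indicates that the job is running, and a value of I indicates that the job is idle. Valid status values are listed in .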

Submit a batch job from an RCE Powered (Interactive) job

If you try to submit a batch job from within an RCE Powered (Interactive) job, you will encounter this error:

ERROR: Can't find address of local schedd

To work around this, you must include the name of the batch scheduler as an argument to condor_submit.

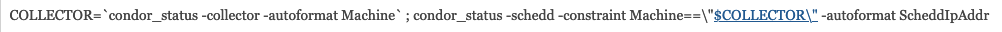

To submit a batch job from RCE Powered shell (or from within any RCE Powered application) you need to run this command to get the batch scheduler IP address and port number:

COLLECTOR=`condor_status -collector -autoformat Machine` ; condor_status -schedd -constraint Machine==\"$COLLECTOR\" -autoformat ScheddIpAddr

The output will look something like this: <10.0.0.32:12345>. Take this value and pass it to the "-name" parameter of condor_submit:

condor_submit -name '<10.0.0.32:12345>' my-job.submit
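If you script this step, it can help to check that the captured value looks like a scheduler address before passing it on. The following is a sketch only: the helper name condor_submit_command and the address pattern are assumptions based on the sample output above, and real addresses may carry extra fields that this simple check tolerates only after the port number.

```python
import re

# Assumed shape: <host:port> with optional trailing characters inside the brackets.
ADDRESS_PATTERN = re.compile(r"^<[^<>\s:]+:\d+[^<>\s]*>$")


def condor_submit_command(schedd_address, submit_file):
    """Build the condor_submit argument list for a named scheduler."""
    address = schedd_address.strip()  # drop the trailing newline from command output
    if not ADDRESS_PATTERN.match(address):
        raise ValueError(f"unexpected scheduler address: {address!r}")
    return ["condor_submit", "-name", address, submit_file]


print(condor_submit_command("<10.0.0.32:12345>\n", "my-job.submit"))
```

Passing the arguments as a list avoids the shell quoting that the angle brackets otherwise require.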

For more information on using condor_submit, please see our documentation on Running Batch Jobs.

Troubleshooting Problems

The Condor central manager stops (evicts or preempts) a process for several reasons, including the following:

-

Another job or another user's job in the queue has a higher priority and preempts or evicts your job.

-

The pool machine on which your process is executed encounters an issue with the machine state or the machine policy.

-

You specified attributes in your submit file that Condor cannot process without error.

Refer to the Condor manual for detailed information about submission, job status, and processing errors:

http://research.cs.wisc.edu/htcondor/manual/latest/2_Users_Manual.html

Note: A simple action can help you to diagnose problems if you submit multiple jobs to Condor. Be sure to specify unique file names for each job's output, history, error, and log files. If you do not specify unique file names for each submission, Condor overwrites existing files that have the same names. This can prevent you from locating information about problems that might occur.

Priorities and Preemption

Job priorities enable you to assign a priority level to each submitted Condor job. Job priorities, however, do not impact user priorities.

User priorities determine how Condor allocates resources among users. A lower numerical value for user priority means higher priority, so a user with priority 5 is allocated more resources than a user with priority 50. You can view user priorities by using the condor_userprio command. For example:

> condor_userprio -allusers

Condor continuously calculates the share of available machines. For example, a user with a priority of 10 is allocated twice as many machines as a user with a priority of 20. New users begin with a priority of 0.5 and, based upon increased usage, their priority rating rises proportionately in relation to other users. Condor enforces this function such that each user gets a fair share of machines according to user priority and historical volume. For example, if a low-priority user is using all available machines and a higher-priority user submits a job, Condor immediately performs a checkpoint and vacates the jobs that belong to the lower-priority user, except for that user's last job.

User priority rating decreases over time and returns to a baseline of 0.5 as jobs are completed and idle time is realized relative to other users.
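The proportional rule described above (priority 10 receives twice as many machines as priority 20) can be worked through with a small calculation. This is a simplified model that assumes shares are exactly inversely proportional to the priority value and ignores everything else Condor takes into account; the function name machine_shares and the user names are hypothetical.

```python
def machine_shares(user_priorities, total_machines):
    """Split machines in inverse proportion to each user's priority value."""
    weights = {user: 1.0 / priority for user, priority in user_priorities.items()}
    total_weight = sum(weights.values())
    return {user: total_machines * weight / total_weight
            for user, weight in weights.items()}


# Two hypothetical users competing for a 30-machine pool.
shares = machine_shares({"alice": 10, "bob": 20}, total_machines=30)
for user, share in shares.items():
    print(user, round(share, 2))
```

In this model the user with priority 10 receives about 20 of the 30 machines and the user with priority 20 receives about 10.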

Process Tracking

To track progress of your processes:

-

Type condor_q to view the status of your process IDs.

-

Check your output directory for the time stamps of your output, log, and error files.

If the output file and log file for a submitted process are more current than the error file, your process probably is running without error.
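The time stamp comparison can be automated. The sketch below is one way to express the rule of thumb above, with a hypothetical function name (looks_healthy) and temporary stand-in files whose time stamps are set explicitly for the demonstration.

```python
import os
import tempfile


def looks_healthy(output_path, log_path, error_path):
    """True when the output and log files are both newer than the error file."""
    error_time = os.path.getmtime(error_path)
    return (os.path.getmtime(output_path) > error_time
            and os.path.getmtime(log_path) > error_time)


# Demonstration with temporary stand-in files and explicit time stamps.
with tempfile.TemporaryDirectory() as workdir:
    paths = {name: os.path.join(workdir, f"{name}.0")
             for name in ("out", "log", "error")}
    for path in paths.values():
        open(path, "w").close()
    os.utime(paths["error"], (1000, 1000))  # error file last touched first
    os.utime(paths["out"], (2000, 2000))    # output and log updated later
    os.utime(paths["log"], (2000, 2000))
    healthy = looks_healthy(paths["out"], paths["log"], paths["error"])

print(healthy)  # prints: True
```

As with the manual check, a True result is only a hint that the process is probably running without error.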

Process Queue

To view detailed information about your processes, including the ClassAd requirements for your jobs, type the command condor_q -analyze.

Refer to the Condor Version 6.8.0 Manual for a description of the value that represents why a process was placed on hold or evicted. Go to the following URL for section 2.5, "Submitting a Job," and search for the text JobStatus under the heading "ClassAd Job Attributes":

http://www.cs.wisc.edu/condor/manual/v6.8.0/2_5Submitting_Job.html

For example:

> condor_q -analyze

Run analysis summary. Of 43 machines,

43 are rejected by your job's requirements

0 are available to run your job

WARNING: Be advised: No resources matched request's constraints

Check the Requirements expression below:

Requirements = ((Memory > 8192)) && (Disk >= DiskUsage)

Error Log

An error file includes information about any errors that occurred when your batch processing executed.

To view the error file for a process and determine where an error occurred, use the cat command. For example:

> cat errorfile

Error in readChar(con, 5) : cannot open the connection

In addition: Warning message:

cannot open compressed file 'Utilization1.RData'

Execution halted

History File

When batch processing completes, Condor removes the cluster from the queue and records information about the processes in the history file. History is displayed for each process on a single line. Information provided includes the following:

-

ID- The cluster and process IDs of the job

-

OWNER- The owner of the job

-

SUBMITTED- The month, day, hour, and minute at which the job was submitted to the queue

-

CPU_USAGE- Remote user central processing unit (CPU) time accumulated by the job to date, in days, hours, minutes, and seconds

-

ST- Completion status of the job, where C is completed and X is removed

-

COMPLETED- Time at which the job was completed

-

CMD- Name of the executable

To view information about processes that you executed on the Condor system, type the command condor_history. For example:

> condor_history

ID OWNER SUBMITTED RUN_TIME ST COMPLETED CMD

1.0 arose 9/26 11:45 0+00:00:00 C 9/26 11:45 /usr/bin/R --no

2.0 arose 9/26 11:48 0+00:00:01 C 9/26 11:48 /usr/bin/R --no

3.0 arose 9/26 11:49 0+00:00:00 C 9/26 11:50 /usr/bin/R --no

3.1 arose 9/26 11:49 0+00:00:01 C 9/26 11:50 /usr/bin/R --no

6.0 arose 10/3 15:52 0+00:00:00 C 10/3 15:52 /nfs/fs1/home/A

6.1 arose 10/3 15:52 0+00:00:00 C 10/3 15:52 /nfs/fs1/home/A

6.2 arose 10/3 15:52 0+00:00:00 C 10/3 15:52 /nfs/fs1/home/A

6.5 arose 10/3 15:52 0+00:00:00 C 10/3 15:52 /nfs/fs1/home/A

6.3 arose 10/3 15:52 0+00:00:00 C 10/3 15:52 /nfs/fs1/home/A

6.4 arose 10/3 15:52 0+00:00:00 C 10/3 15:52 /nfs/fs1/home/A

6.6 arose 10/3 15:52 0+00:00:01 C 10/3 15:52 /nfs/fs1/home/A

9.0 arose 10/4 11:02 0+00:00:00 C 10/4 11:02 /nfs/fs1/home/A

9.1 arose 10/4 11:02 0+00:00:01 C 10/4 11:02 /nfs/fs1/home/A

9.2 arose 10/4 11:02 0+00:00:00 X ??? /nfs/fs1/home/A

9.3 arose 10/4 11:02 0+00:00:00 X ??? /nfs/fs1/home/A

9.5 arose 10/4 11:02 0+00:00:00 X ??? /nfs/fs1/home/A

9.6 arose 10/4 11:02 0+00:00:00 X ??? /nfs/fs1/home/A

9.4 arose 10/4 11:02 0+00:00:00 X ??? /nfs/fs1/home/A

Search through the history file for your process and cluster IDs to locate information about your jobs.

To view information about all completed processes in a cluster, type the command condor_history <cluster ID>. To view information about one process, type the command condor_history <cluster ID>.<process ID>. For example:

> condor_history 9

ID OWNER SUBMITTED RUN_TIME ST COMPLETED CMD

9.0 arose 10/4 11:02 0+00:00:00 C 10/4 11:02 /nfs/fs1/home/A

9.1 arose 10/4 11:02 0+00:00:01 C 10/4 11:02 /nfs/fs1/home/A

9.2 arose 10/4 11:02 0+00:00:00 X ??? /nfs/fs1/home/A

9.3 arose 10/4 11:02 0+00:00:00 X ??? /nfs/fs1/home/A

9.5 arose 10/4 11:02 0+00:00:00 X ??? /nfs/fs1/home/A

9.6 arose 10/4 11:02 0+00:00:00 X ??? /nfs/fs1/home/A

9.4 arose 10/4 11:02 0+00:00:00 X ??? /nfs/fs1/home/A

Process Log File

A log file includes information about everything that occurred during your cluster processing: when it was submitted, when execution began and ended, when a process was restarted, and whether there were any issues. When processing finishes, the exit conditions for that process are noted in the log file.

Refer to the Condor Manual for a description of the entries in the process log file. Go to the following URL for section 2.6, "Managing a Job," and go to subsection 2.6.6, "In the log file":

http://research.cs.wisc.edu/htcondor/manual/latest/2_6Managing_Job.html

To view the log file for a process and determine where an error occurred, use the cat command. For example, the following log file indicates that the process completed normally:

> cat log.1

000 (012.001.000) 10/04 12:14:51 Job submitted from host: <10.0.0.47:60603>

...

001 (012.001.000) 10/04 12:15:00 Job executing on host: <10.0.0.61:37097>

...

005 (012.001.000) 10/04 12:15:00 Job terminated.

(1) Normal termination (return value 0)

Usr 0 00:00:00, Sys 0 00:00:00 - Run Remote Usage

Usr 0 00:00:00, Sys 0 00:00:00 - Run Local Usage

Usr 0 00:00:00, Sys 0 00:00:00 - Total Remote Usage

Usr 0 00:00:00, Sys 0 00:00:00 - Total Local Usage

7 - Run Bytes Sent By Job

163 - Run Bytes Received By Job

7 - Total Bytes Sent By Job

163 - Total Bytes Received By Job

...

Following is an example log file for a process that did not complete execution:

> cat log.4

000 (09.000.000) 09/20 14:47:31 Job submitted from host: <x1.hmdc.harvard.edu>

...

007 (09.000.000) 09/20 15:02:10 Shadow exception!

Error from starter on x1.hmdc.harvard.edu: Failed to open 'scratch.1/frieda/workspace/v67/condor-test/test3/run_0/b.input' as standard input: No such file or directory (errno 2)

0 - Run Bytes Sent By Job

0 - Run Bytes Received By Job

...
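When you have many log files, you can scan them for the three-digit event codes that begin each entry (000 submitted, 001 executing, 005 terminated, 007 shadow exception in the examples above). The following sketch classifies a log by those codes; the function name job_outcome and its return labels are hypothetical, and the sample logs are shortened versions of the two examples above.

```python
import re

# Each log entry starts with a three-digit event code and a job ID in parentheses.
EVENT_PATTERN = re.compile(r"\b(\d{3}) \(\d+\.\d+\.\d+\)")

GOOD_LOG = """\
000 (012.001.000) 10/04 12:14:51 Job submitted from host: <10.0.0.47:60603>
001 (012.001.000) 10/04 12:15:00 Job executing on host: <10.0.0.61:37097>
005 (012.001.000) 10/04 12:15:00 Job terminated.
(1) Normal termination (return value 0)
"""

BAD_LOG = """\
000 (09.000.000) 09/20 14:47:31 Job submitted from host: <x1.hmdc.harvard.edu>
007 (09.000.000) 09/20 15:02:10 Shadow exception!
"""


def job_outcome(log_text):
    """Classify a job from the event codes found in its log text."""
    events = EVENT_PATTERN.findall(log_text)
    if "005" in events and "Normal termination (return value 0)" in log_text:
        return "completed"
    if "007" in events:
        return "shadow exception"
    if "001" in events:
        return "executing"
    if "000" in events:
        return "submitted"
    return "unknown"


print(job_outcome(GOOD_LOG))  # prints: completed
print(job_outcome(BAD_LOG))   # prints: shadow exception
```

This covers only the events shown in this section; refer to the Condor Manual for the full list of log entries.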

Held Process

To view information about processes that Condor placed on hold, type condor_q -hold. For example:

> condor_q -hold

-- Submitter: vnc.hmdc.harvard.edu : <10.0.0.47:60603> : vnc.hmdc.harvard.edu

ID OWNER HELD_SINCE HOLD_REASON

17.0 arose 10/5 12:53 via condor_hold (by user arose)

17.1 arose 10/5 12:53 via condor_hold (by user arose)

17.2 arose 10/5 12:53 via condor_hold (by user arose)

17.3 arose 10/5 12:53 via condor_hold (by user arose)

17.4 arose 10/5 12:53 via condor_hold (by user arose)

17.5 arose 10/5 12:53 via condor_hold (by user arose)

17.6 arose 10/5 12:53 via condor_hold (by user arose)

17.7 arose 10/5 12:53 via condor_hold (by user arose)

17.9 arose 10/5 12:53 via condor_hold (by user arose)

9 jobs; 0 idle, 0 running, 9 held

Refer to the Condor Manual for a description of the value that represents why a process was placed on hold. Go to the following URL for section 2.5, "Submitting a Job," and look for subsection 2.5.2.2, "ClassAd Job Attributes." Look for the entry HoldReasonCode:

http://research.cs.wisc.edu/htcondor/manual/latest/2_5Submitting_Job.html

To place a process on hold, type the command condor_hold <cluster ID>.<process ID>. For example:

> condor_hold 8.33

Job 8.33 held

To place on hold any processes not completed in a full cluster, type condor_hold <cluster ID>. For example:

> condor_hold 8

Cluster 8 held.

The status of those uncompleted processes in cluster 8 is now H (on hold):

> condor_q

-- Submitter: vnc.hmdc.harvard.edu : <10.0.0.47:60603> : vnc.hmdc.harvard.edu

ID OWNER SUBMITTED RUN_TIME ST PRI SIZE CMD

8.2 sspade 10/4 11:19 0+00:00:00 H 0 9.8 dwarves.pl

8.5 sspade 10/4 11:19 0+00:00:00 H 0 9.8 dwarves.pl

8.6 sspade 10/4 11:19 0+00:00:00 H 0 9.8 dwarves.pl

3 jobs; 0 idle, 0 running, 3 held

To release a process from hold, type the command condor_release <cluster ID>.<process ID>. For example:

> condor_release 8.33

Job 8.33 released.

To release the full cluster from hold, type the command condor_release <cluster ID>. For example:

> condor_release 8

Cluster 8 released.

You can instruct the Condor system to place your batch processing on hold if it spends a specified amount of time suspended (that is, not processing). For example, include the following attribute in your submit file to place your jobs on hold if they spend more than 50 percent of their time suspended:

Periodic_hold = CumulativeSuspensionTime > (RemoteWallClockTime /2.0)
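To make the condition concrete, the same comparison can be written as an ordinary function. This is an illustration of the arithmetic only, not how Condor evaluates the expression; the function name should_hold is hypothetical and both arguments are assumed to be in seconds.

```python
def should_hold(cumulative_suspension_time, remote_wall_clock_time):
    """Mirror the Periodic_hold expression: suspended for more than half the wall-clock time."""
    return cumulative_suspension_time > (remote_wall_clock_time / 2.0)


print(should_hold(400, 1000))  # prints: False (suspended 40 percent of the time)
print(should_hold(600, 1000))  # prints: True (suspended 60 percent of the time)
```

A job suspended for exactly half of its wall-clock time is not held, because the comparison is strictly greater than.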